Joyce Yi

December 15, 2025

Measuring the ROI of Marketing AI

A clear value framework to stop reporting on usage and start measuring outcomes.

We’re in the early phase of a new computing cycle, and companies are doing what they always do at the start of one: tripping over their own feet.

Every few months, a new provocative headline claims AI is “failing.” The most recent was MIT saying 95% of AI pilots fall apart. The claim spread because it confirmed a fear, not because it held up under real review.

Our 2025 State of AI in Marketing report shows the same pattern at scale: most teams are adopting AI, but only a smaller share can show consistent, measurable value.

The truth is simpler: Most teams aren’t failing at AI. They’re failing at deploying anything new, just like past tech cycles. Cloud, mobile, and the early internet all went through the same slow grind. AI is no exception.

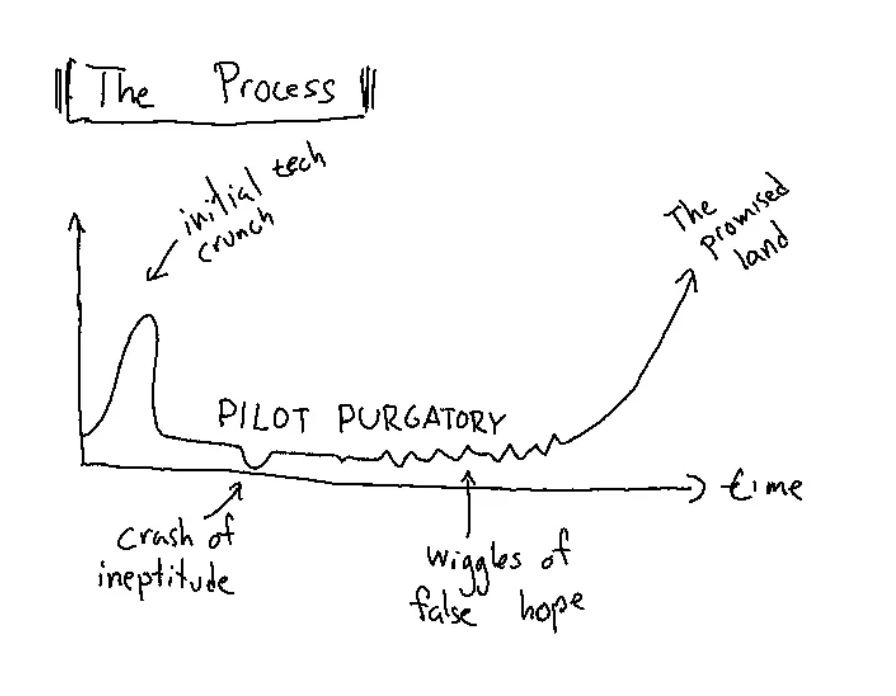

Here’s the sketch I show leaders on many calls because it explains the pattern better than words can:

Everyone starts with a spike of hope, hits the crash, then drifts in pilot purgatory. A few fight their way out. The rest stay stuck because they can’t show progress early enough to justify moving forward.

The teams that break out of that slump are the ones focused on outcomes, not tasks.

This is why measurement matters. Not as a reporting chore, but as the only way to prove AI isn’t just theater.

Most teams collect the wrong signals. They track hours saved with no financial link. They report usage but not outcomes. They run pilot after pilot with no shared baseline. Eventually, leaders lose patience, and the program stalls.

A clear value framework fixes this. It gives teams a way to show what’s real. At Jasper, we’ve seen that when marketing teams anchor around the right mix of metrics—near-term signals tied to long-term value—the noise drops and the path forward becomes clear.

Below is the version we use.

The three places where marketing AI shows real ROI

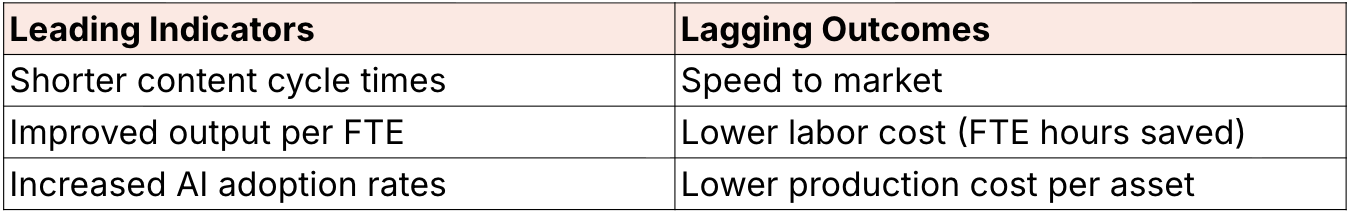

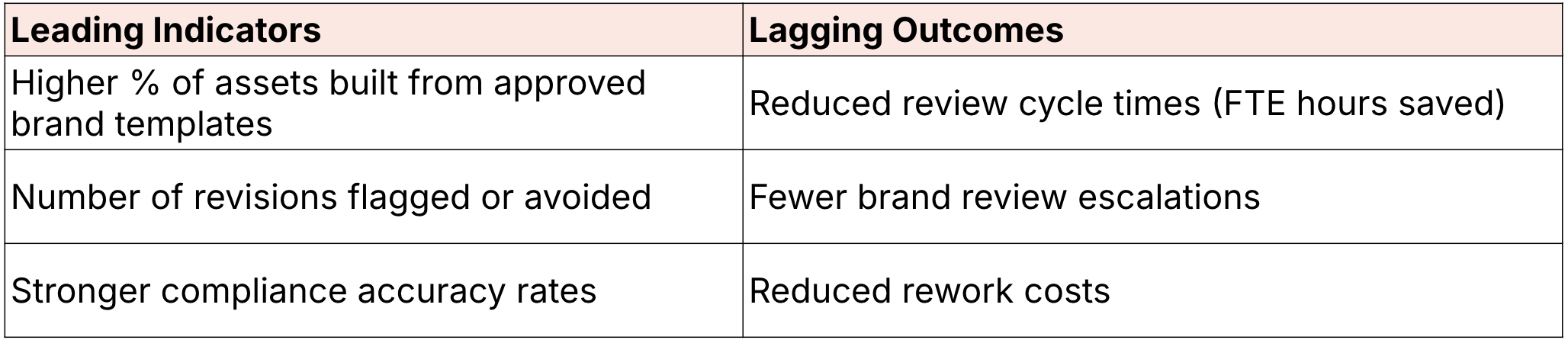

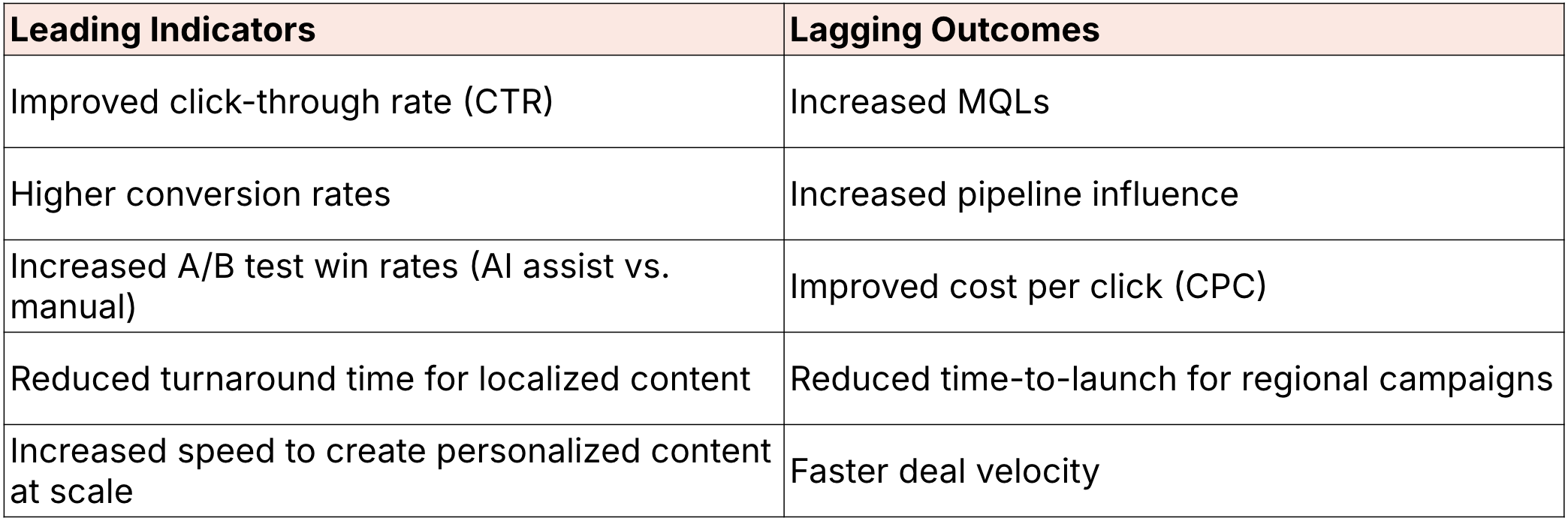

AI outcomes become measurable when early signals (leading indicators) tie cleanly to business value (lagging outcomes). This is the only way to move past “cool demo” territory.

1. Reduce operating cost and complexity

The first path to ROI is usually speed.

Automating slow steps in the content lifecycle—brief creation, first drafts, campaign setup—helps teams do more with the same headcount. This isn’t about squeezing people; it’s about removing the drag.

In real use:

For a leading indicator, Webster First Federal Credit Union cut their content cycle time with Jasper. They reduced blog production time by 93% and shipped 4× more content without adding headcount.

For a lagging indicator, Cushman & Wakefield freed 10,000+ hours a year by automating proposal and marketing content. Output went up 50%, and the team lowered the overall cost of producing assets.
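One way to give "hours saved" the financial link it usually lacks is to price those hours at a loaded hourly rate and compare the result to what the program costs. The sketch below is only an illustration: the function names, the hourly rate, and every number in it are hypothetical and are not taken from the customer examples above.

```python
def annual_cost_avoidance(hours_saved_per_year: float, loaded_hourly_rate: float) -> float:
    """Price saved hours at a fully loaded hourly rate (salary plus overhead)."""
    if hours_saved_per_year < 0 or loaded_hourly_rate < 0:
        raise ValueError("hours and rate must be non-negative")
    return hours_saved_per_year * loaded_hourly_rate


def simple_roi(annual_benefit: float, annual_cost: float) -> float:
    """Return ROI as a ratio: (benefit - cost) / cost."""
    if annual_cost <= 0:
        raise ValueError("annual_cost must be positive")
    return (annual_benefit - annual_cost) / annual_cost


# Hypothetical inputs, chosen only to make the arithmetic easy to follow.
hours_saved = 2_000        # assumed hours freed per year
hourly_rate = 50.0         # assumed loaded cost per hour, in dollars
program_cost = 40_000.0    # assumed annual cost of tools and enablement

benefit = annual_cost_avoidance(hours_saved, hourly_rate)
roi = simple_roi(benefit, program_cost)

print(f"Cost avoidance: ${benefit:,.0f}")
print(f"ROI: {roi:.0%}")
```

The hourly rate is the assumption leaders will challenge first, so it helps to agree on it with finance before the number appears in a report.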

2. Protect brand integrity

As teams scale content, brand control gets messy fast. More channels, more contributors, more ways things drift. AI helps keep standards tight—tone, templates, compliance—without slowing people down.

In real use:

Old Dominion Freight Line trained Jasper on industry language and rules so new staff could ramp faster and stay consistent. Volume increased without tradeoffs.

3. Drive revenue growth

This is the upside most leaders care about.

When teams ship better content faster, and personalize without bottlenecks, the sales pipeline moves.

In real use:

VertoDigital cut time-to-market by 50%, a clear lagging signal that their production engine sped up.

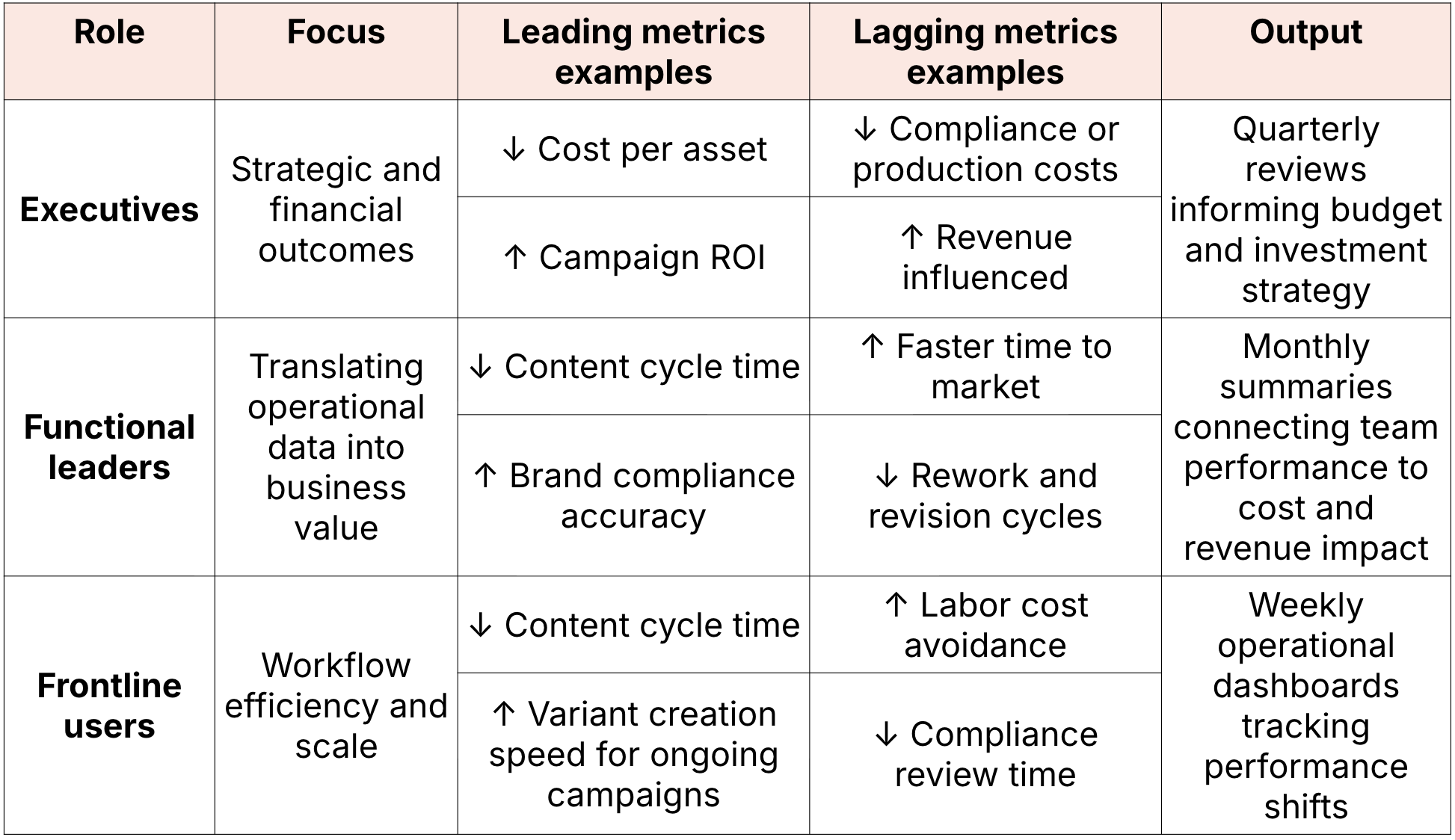

A role-based way to track outcomes

ROI only sticks when everyone—not just leadership or centralized AI labs—knows what to track and why. Each group owns a slice of the signal.

The reporting rhythm changes depending on the level: weekly dashboards for frontline teams, monthly roll-ups for managers, and quarterly reviews for leadership.

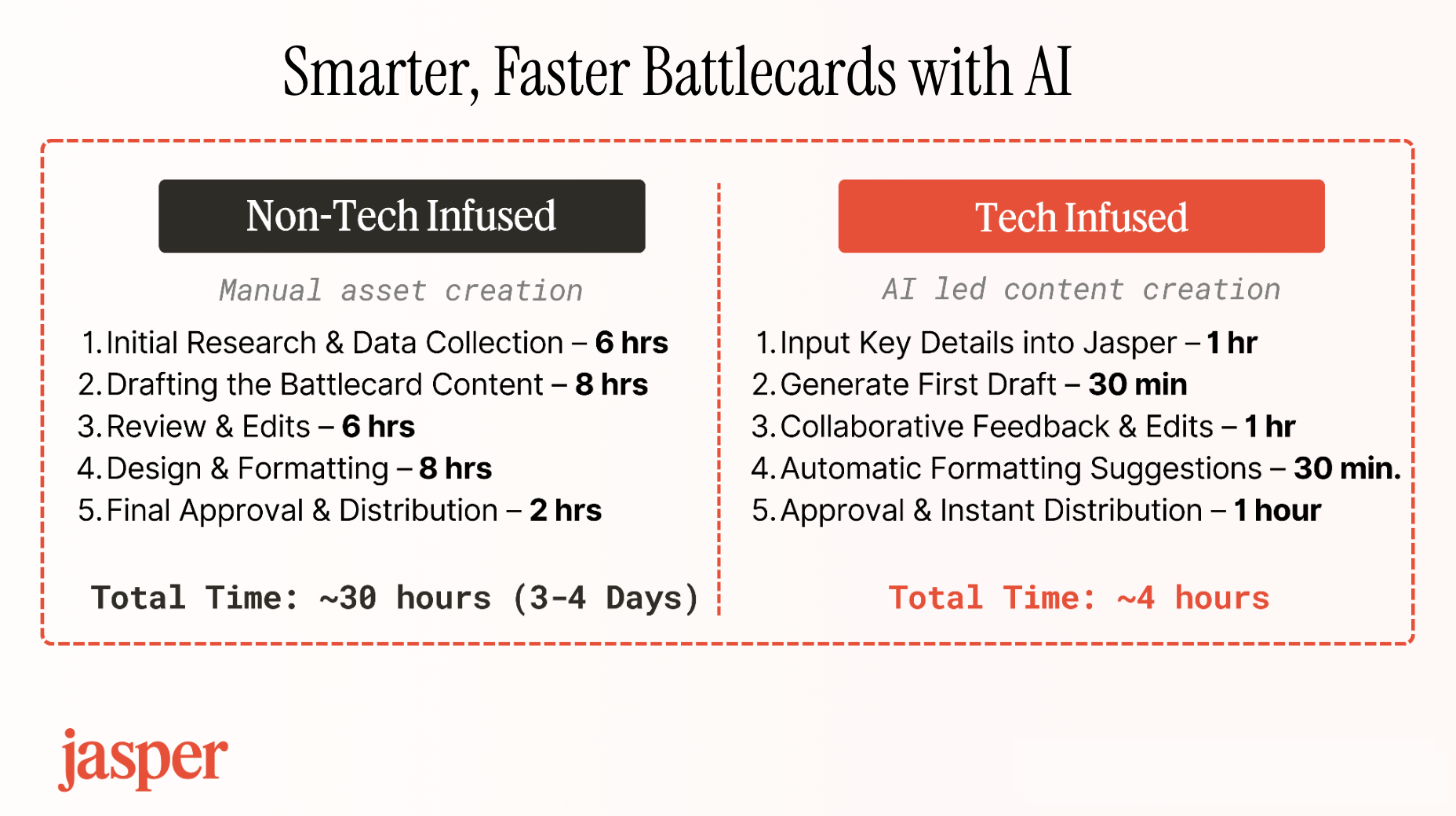

Start with a baseline (most don’t)

You can’t prove improvement without a before. Most teams skip this, then struggle to explain where value came from.

Do this before rolling AI into workflows:

- Pick one or two core processes to evaluate

- Capture cycle times, output per FTE, and quality markers

- Make sure data collection is clean and repeatable

- Roll out the new workflow or use case and measure the shift in outcomes

If the baseline is tight, the difference will be clear and credible. Leaders trust what they can see side-by-side.
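The steps above can be sketched as a small before-and-after comparison. This is a minimal illustration, assuming a team records the same three measures (cycle time, output per FTE, and a quality score) before and after rollout. The `ProcessSnapshot` type, its field names, and all of the numbers are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class ProcessSnapshot:
    """One measurement of a process; field names are illustrative."""
    cycle_time_hours: float   # time to produce one asset
    assets_per_fte: float     # output per full-time employee per month
    quality_score: float      # e.g. an internal review score


def percent_change(before: float, after: float) -> float:
    """Relative change from the baseline, in percent."""
    if before == 0:
        raise ValueError("baseline value must be non-zero")
    return (after - before) / before * 100


def compare(baseline: ProcessSnapshot, current: ProcessSnapshot) -> dict:
    """Percent change for each tracked measure."""
    return {
        "cycle_time_hours": percent_change(baseline.cycle_time_hours, current.cycle_time_hours),
        "assets_per_fte": percent_change(baseline.assets_per_fte, current.assets_per_fte),
        "quality_score": percent_change(baseline.quality_score, current.quality_score),
    }


# Hypothetical measurements, not real customer data.
before = ProcessSnapshot(cycle_time_hours=8.0, assets_per_fte=10.0, quality_score=4.0)
after = ProcessSnapshot(cycle_time_hours=2.0, assets_per_fte=25.0, quality_score=4.5)

shift = compare(before, after)
for metric, pct in shift.items():
    print(f"{metric}: {pct:+.1f}%")
```

Keeping quality in the same snapshot as speed is deliberate: a drop in cycle time is only credible if the quality marker holds or improves alongside it.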

For example, here's how a baseline for manual battlecard creation compares to AI-led content creation:

The crawl → walk → run path to AI maturity

Teams that jump straight to “run” mode usually crash. AI maturity is built in stages, just like previous computing eras.

- Crawl: Focus on efficiency. Track cycle time, task speed, and output per FTE. Build good habits.

- Walk: Add performance metrics such as engagement, accuracy, and reliability. Show that speed gains also lift outcomes.

- Run: Tie everything to financial and strategic impact: cost avoidance, revenue growth, customer impact. At this stage, ROI becomes part of budget planning.

A team shouldn’t advance until it proves stability in each phase: steady data, real improvement, and business impact.
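One way to make that gate explicit is to check each condition in code before moving a team to the next stage. The sketch below is an assumption about how such a check might look, not a method described here: "steady data" is approximated by a low coefficient of variation across weekly readings, "real improvement" by a minimum percent gain over the baseline, and "business impact" by a flag someone signs off on. The thresholds and sample values are hypothetical.

```python
from statistics import mean, pstdev


def is_steady(values, max_cv=0.15):
    """Steady data: spread is small relative to the average reading."""
    avg = mean(values)
    if avg == 0:
        return False
    return pstdev(values) / abs(avg) <= max_cv


def improvement_pct(baseline, values, lower_is_better=True):
    """Percent improvement of the average reading over the baseline."""
    if baseline == 0:
        raise ValueError("baseline must be non-zero")
    avg = mean(values)
    gain = (baseline - avg) if lower_is_better else (avg - baseline)
    return gain / baseline * 100


def ready_to_advance(baseline, values, has_business_impact,
                     min_improvement_pct=10.0, max_cv=0.15, lower_is_better=True):
    """All three conditions must hold before moving to the next stage."""
    return bool(
        is_steady(values, max_cv)
        and improvement_pct(baseline, values, lower_is_better) >= min_improvement_pct
        and has_business_impact
    )


# Hypothetical weekly cycle times in hours, against an 8-hour baseline.
steady_weeks = [2.0, 2.2, 1.9, 2.1]
noisy_weeks = [1.0, 5.0, 2.0, 8.0]

print(ready_to_advance(8.0, steady_weeks, has_business_impact=True))
print(ready_to_advance(8.0, noisy_weeks, has_business_impact=True))
```

The noisy series fails the gate even though its average beats the baseline, which is the point of checking stability separately from improvement.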

Where this leads

When teams measure early signals, connect them to long-term value, and use those insights to shape how they work, AI stops being a science experiment. It becomes a real operating shift.

And the gap widens fast between teams who treat AI as a tool and those who treat it as a lever.

If you want to see how Jasper customers quantify these gains, the Total Economic Impact™ of Jasper study by Forrester breaks it down.
